Reliably Assistant

After completing work on an experiment builder, the Reliably team decided to try using generative AI to write experiments.

Easier experiments

The experiment builder removed a lot of complexity, as Reliably users no longer needed to write JSON files. Nevertheless, it still left them with over 200 activities (the basic building blocks) to choose from, which can be overwhelming. That's when we decided to experiment with using ChatGPT to write experiments.

Preventing hallucinations

As the corpus of publicly available JSON experiments is quite small (experiments are most often kept private by organizations), ChatGPT had a tendency to invent activities when asked to write experiments.

To work around this problem, we provide it with the list of activities and their descriptions as a pre-prompt. We also require it to use only activities from this pre-existing pool.
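As an illustration of this idea, here is a minimal sketch of how such a pre-prompt could be assembled. The `Activity` shape, the prompt wording, and the function names are assumptions made for this example, not the actual Reliably code. The second function is an extra safety net that is not described above: it lists any activity in an answer that is not part of the pool.

```typescript
// Hypothetical shape of a catalog entry; the real fields may differ.
interface Activity {
  name: string;
  description: string;
}

// Build a pre-prompt that lists every allowed activity and forbids any other.
function buildPrePrompt(activities: Activity[]): string {
  const catalog = activities
    .map((activity) => `- ${activity.name}: ${activity.description}`)
    .join("\n");
  return [
    "You write chaos engineering experiments.",
    "Only use activities from the list below. Never invent an activity.",
    "Available activities:",
    catalog,
  ].join("\n");
}

// Optional defensive check (an addition for this sketch): return the names
// used in an answer that do not exist in the pool of activities.
function findUnknownActivities(used: string[], activities: Activity[]): string[] {
  const allowed = new Set(activities.map((activity) => activity.name));
  return used.filter((name) => !allowed.has(name));
}
```

An empty array from `findUnknownActivities` means every activity in the answer comes from the pool.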

Defining the output

Finally, we specify a JSON output format that can be passed to, and parsed by, the front-end interface.
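To give an idea of what parsing such an output can look like on the front-end side, here is a hedged sketch. The `AssistantExperiment` shape is hypothetical (the real format is not shown here); the point is only that the answer is checked before the interface uses it.

```typescript
// Hypothetical output shape, assumed for this example only.
interface AssistantExperiment {
  title: string;
  activities: { name: string }[];
}

// Parse the raw answer and return null if it is not valid JSON
// or does not match the expected shape.
function parseAssistantOutput(raw: string): AssistantExperiment | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null;
  }
  if (typeof data !== "object" || data === null) return null;

  const candidate = data as Record<string, unknown>;
  const title = candidate["title"];
  const rawActivities = candidate["activities"];
  if (typeof title !== "string" || !Array.isArray(rawActivities)) return null;

  const activities: { name: string }[] = [];
  for (const item of rawActivities) {
    if (typeof item !== "object" || item === null) return null;
    const name = (item as Record<string, unknown>)["name"];
    if (typeof name !== "string") return null;
    activities.push({ name });
  }
  return { title, activities };
}
```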

The workflow

-

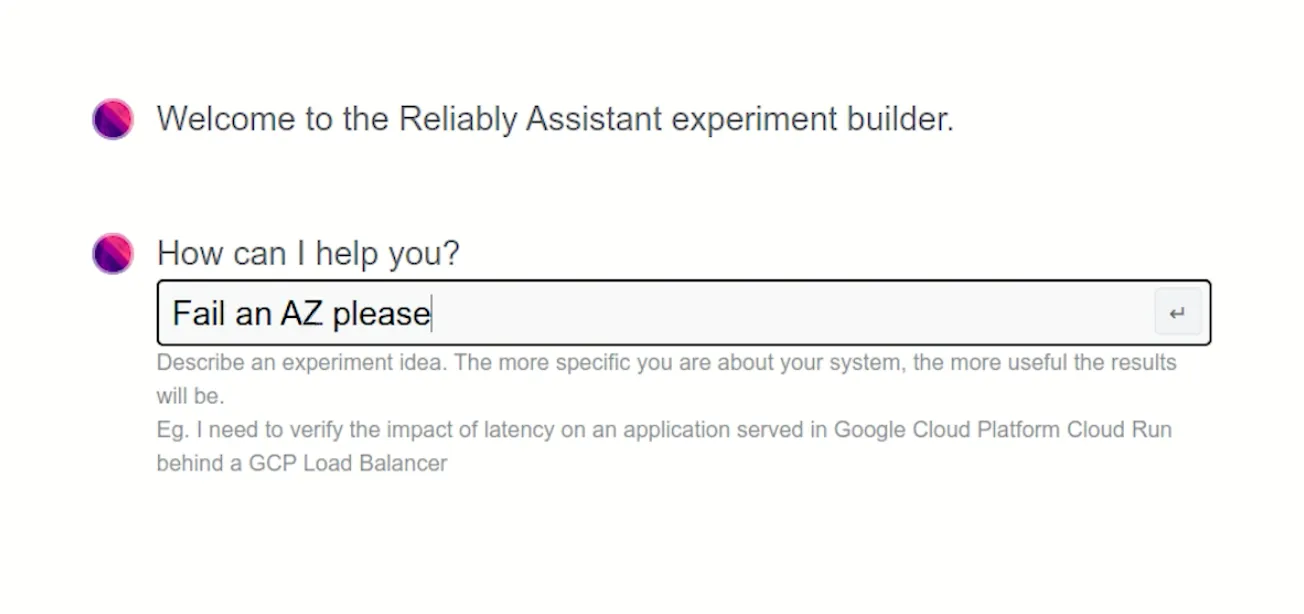

Ask a question

When a user clicks on the Reliably Assistant button, the regular interface is replaced by the Assistant interface, which displays a single field where the user can ask a question.

-

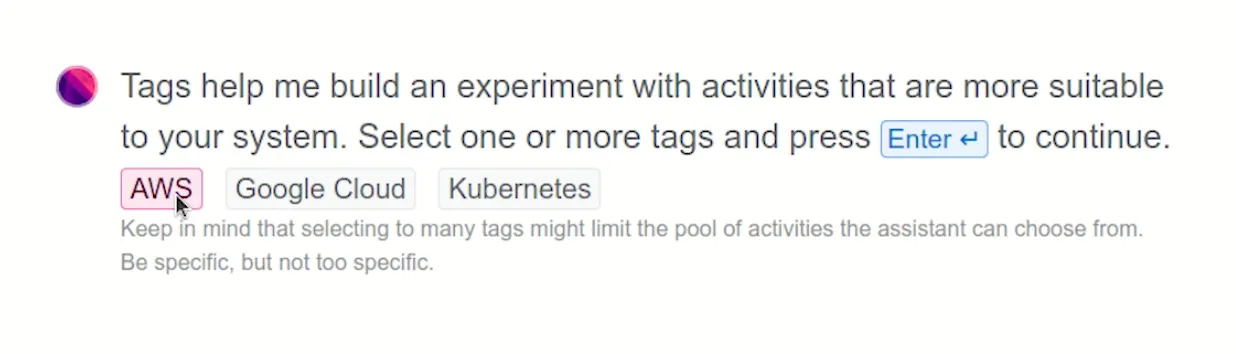

Specify a target system

The Assistant then asks the user to select a target system. This allows the Assistant to pick only activities that work with the user's system.

Asking the user to select an option from a list is both more straightforward and more efficient than letting ChatGPT guess it from the original question.
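In code, narrowing the pool down to the selected target can be as simple as a filter. This sketch assumes each activity is tagged with a single `target` field, which is a simplification for illustration; the names are hypothetical.

```typescript
// Hypothetical catalog entry tagged with the system it applies to.
interface TargetedActivity {
  name: string;
  description: string;
  target: string;
}

// Keep only the activities that apply to the target system chosen by the user.
function activitiesForTarget(
  activities: TargetedActivity[],
  target: string
): TargetedActivity[] {
  return activities.filter((activity) => activity.target === target);
}
```

Only the filtered list then needs to be sent in the pre-prompt, which also keeps it shorter.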

-

Experiment creation

ChatGPT returns its answer as a streamed JSON file. Rather than waiting for the experiment to be complete before displaying it (ChatGPT 4 sometimes takes its time), we display the selected activities on the fly by fetching the resulting JSON file every 30 seconds until it is returned as complete.
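The polling loop can be sketched as follows. The `ResultSnapshot` shape, the `completed` flag, and the function names are assumptions made for this example, and the sketch also assumes activities are only ever appended to the result. The fetching function is passed in as a parameter so the loop is not tied to a specific endpoint.

```typescript
// Hypothetical snapshot of the result file; field names are assumptions.
interface ResultSnapshot {
  completed: boolean;
  activities: string[];
}

// Return the activities that were appended since the last display.
function newActivities(displayedCount: number, snapshot: ResultSnapshot): string[] {
  return snapshot.activities.slice(displayedCount);
}

const sleep = (ms: number): Promise<void> =>
  new Promise((resolve) => setTimeout(resolve, ms));

// Fetch the result repeatedly, reporting each new activity once,
// until the snapshot is marked as complete.
async function pollUntilComplete(
  fetchSnapshot: () => Promise<ResultSnapshot>,
  onActivity: (name: string) => void,
  intervalMs: number = 30000
): Promise<string[]> {
  const displayed: string[] = [];
  for (;;) {
    const snapshot = await fetchSnapshot();
    for (const name of newActivities(displayed.length, snapshot)) {
      displayed.push(name);
      onActivity(name);
    }
    if (snapshot.completed) return displayed;
    await sleep(intervalMs);
  }
}
```

In the browser, `fetchSnapshot` would typically wrap a `fetch` call to the result file, and `onActivity` would add the activity to the displayed list.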

-

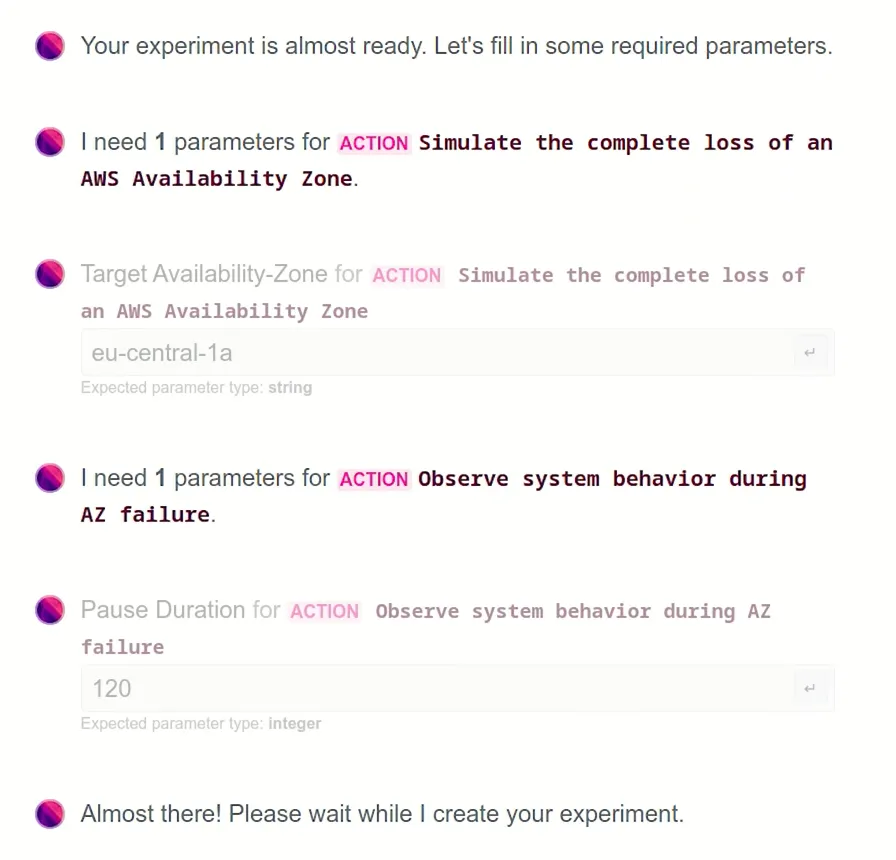

Configuration

The Assistant then prompts the user for required information regarding each activity, such as targets or thresholds.

-

Run

The Assistant can then run the experiment in the cloud, and log its results.

More about Reliably

Reliably was a startup founded by the creators of the open-source Chaos Toolkit. Having helped them with branding work, I was asked to join their company, ChaosIQ, when it raised seed funding from Accel in early 2019, to build a full-fledged chaos engineering platform on top of Chaos Toolkit.

Over the years of creating the platform and building new features, I worked with various tech stacks, all revolving, on the front-end side, around Vue.js and TypeScript.

The first version of the product, named ChaosIQ, was a dashboard and data platform for Chaos Toolkit. While experiments and execution data were stored locally by Chaos Toolkit, ChaosIQ allowed users to store data and experiments in one place and share them with their team and within their organization, in order to make chaos engineering beneficial to a larger number of users.

This version of the product was built using TypeScript, Vue.js 2, and a Vuex store. This stack was used to build an SPA that fetched data from the APIs of a Python back-end.

In 2021, a major change in the product scope, evolving from a chaos engineering platform to an observability platform, was the occasion to update the front-end stack, while the back-end went through a major overhaul.

The new product, named Reliably, was built with TypeScript, the brand new (at the time) Vue 3 with the Composition API, and what was not yet the official Vue store, Pinia. The back-end was written in Go, which honestly made little difference to me, as my impact on the back-end codebase was limited at best.

The introduction of GitHub Actions allowed us to switch our build and deployment process away from Jenkins. The approachable YAML workflows make it easy for each team to create and maintain workflows for their own products.

In 2023, we attempted to remove two of the main friction points for users:

- letting them create experiments with a visual interface,

- running experiments without leaving the SaaS product.

To accommodate a smaller team, and the need for the front-end to be manageable by non-(front-end)-specialists, I decided to use Astro, a static site generator we had been using for our website, as the foundation of the new Reliably. Coupled with the very lightweight, framework-agnostic NanoStores, it serves as the basis for the platform, while all business-logic work can be written using any JavaScript framework, even mixing them if needed. I still decided to use Vue 3, and this new stack proved both performant and developer-friendly, allowing us to build new features at a quick pace.